GeoLocator: a novel GeoAI tool making world travel without barriers

Presenters:

Shuju Sun

Yifan Yang

Daoyang Li

University of Southern California

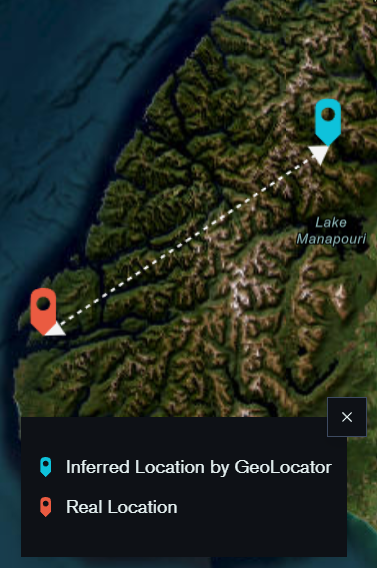

Geographic privacy, a crucial aspect of personal security, often goes unnoticed in daily activities. This paper addresses how this form of privacy is underestimated amid increasing online data sharing and advances in information-gathering technologies. With the surge in the use of large multimodal models (LMMs), such as GPT-4, for Open Source Intelligence (OSINT), the risks of geographic privacy breaches have intensified. This study highlights the significance of these developments and their implications for individual privacy. The primary objective is to demonstrate how advanced AI tools, specifically a GPT-4-based model named “GeoLocator,” can infer geographic information from content shared online and thereby potentially compromise geographic privacy. We developed “GeoLocator” to infer geographic information from publicly available data sources. The study comprised four experimental cases, each offering a different perspective on the tool’s ability to infer precise location data from partial images and social media content. The experiments showed that “GeoLocator” could successfully infer specific geographic details, exposing vulnerabilities in current geo-privacy measures. These findings underscore how easily geographic information can be unintentionally disclosed. The paper concludes with a discussion of the broader implications of these findings for individuals and the wider community, emphasizing the urgent need for greater awareness of, and protective measures against, geo-privacy leakage in an era of advanced AI and widespread social media use.